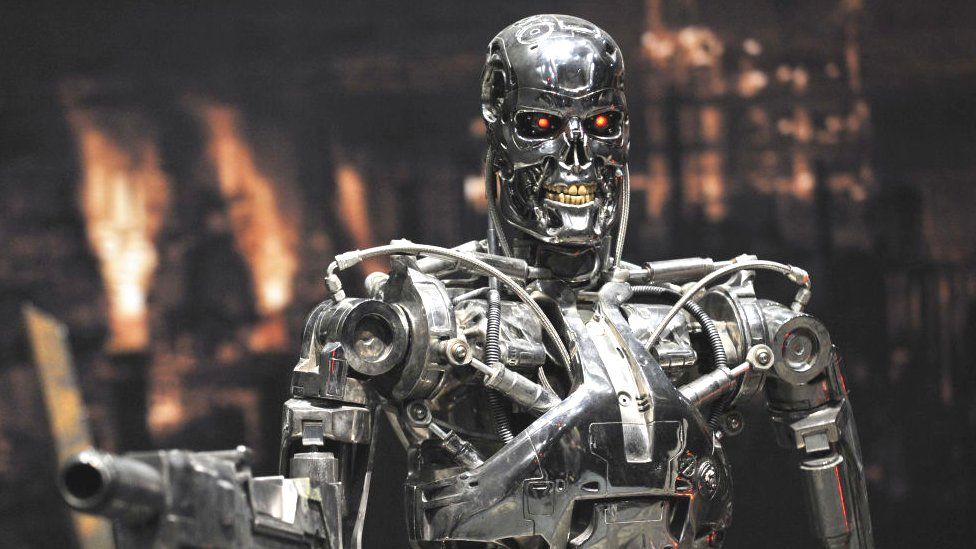

Terminate the Terminator references, minister suggests

By Chris Vallance

Technology reporter

Tech minister Paul Scully has warned so-called “Terminator-style” risks to humanity from artificial intelligence (AI) should not be highlighted at the expense of the good it can do.

The Terminator sci-fi film franchise envisages a malevolent AI “Skynet” system bent on humanity’s destruction.

Prime Minister Rishi Sunak is about to travel to the US where AI is one of the items he will be discussing.

AI describes the ability of computers to perform tasks typically requiring human intelligence.

When it came to AI, there was a “dystopian point of view that we can follow here. There’s also a utopian point of view. Both can be possible”, Mr Scully told the TechUK Tech Policy Leadership Conference in Westminster.

A dystopia is an imaginary place in which everything is as bad as possible.

“If you’re only talking about the end of humanity because of some, rogue, Terminator-style scenario, you’re going to miss out on all of the good that AI is already functioning – how it’s mapping proteins to help us with medical research, how it’s helping us with climate change.

“All of those things it’s already doing and will only get better at doing.”

The government recently put out a policy document on regulating AI, which was criticised for not establishing a dedicated watchdog, and some think additional measures may eventually be needed to deal with the most powerful future systems.

Marc Warner, a member of the AI Council, an expert body set up to advise the government, told BBC News last week a ban on the most powerful AI may be necessary.

However, he argued that “narrow AI” designed for particular tasks, such as systems that look for cancer in medical images, should be regulated on the same basis as existing tech.

Responding to reports on the possible dangers posed by AI, the prime minister’s spokesperson said: “We are not complacent about the potential risks of AI, but it also provides significant opportunities.

“We cannot proceed with AI without the guard rails in place.”

‘Fear parade’

Labour’s shadow culture secretary Lucy Powell told the BBC that while there was a “level of hysteria going on and that’s certainly dominating the public debate at the moment, there are real opportunities with the development of a technology like AI”.

She added: “But we do have to think really carefully about the risks, make sure we’ve got good regulation in place.”

It was also important, she said, that everyone benefited from the impact of AI and that it “doesn’t just go to the big tech giants in the US as happened in the last technological revolution”.

Ms Powell earlier told the Guardian she felt AI should be licensed in a similar way to medicines or nuclear power, both of which had dedicated regulators.

AI company OpenAI recently blogged that a global regulator like the International Atomic Energy Agency might be needed for super-intelligent AI.

Speaking at the same TechUK conference, Microsoft president Brad Smith said the most powerful AIs may need safety licences to operate.

“Before a model can be deployed it will have to pass some kind of safety review.”

Mr Smith argued it would be better if there was international co-operation and a single model of regulation. He said that when it came to cyber and national security, the UK and US were well placed to work together.

He told reporters at the event that Microsoft would not join “the fear parade”, adding it would be better to reduce some of the rhetoric and focus more on current problems.

A number of other experts have also said focusing on sci-fi-like disaster scenarios is a distraction from current issues with AI, such as the risk of racial or gender biases in algorithms.