By Shiona McCallum

Technology reporter

Seven leading companies in artificial intelligence have committed to managing risks posed by the tech, the White House has said.

This will include testing the security of AI, and making the results of those tests public.

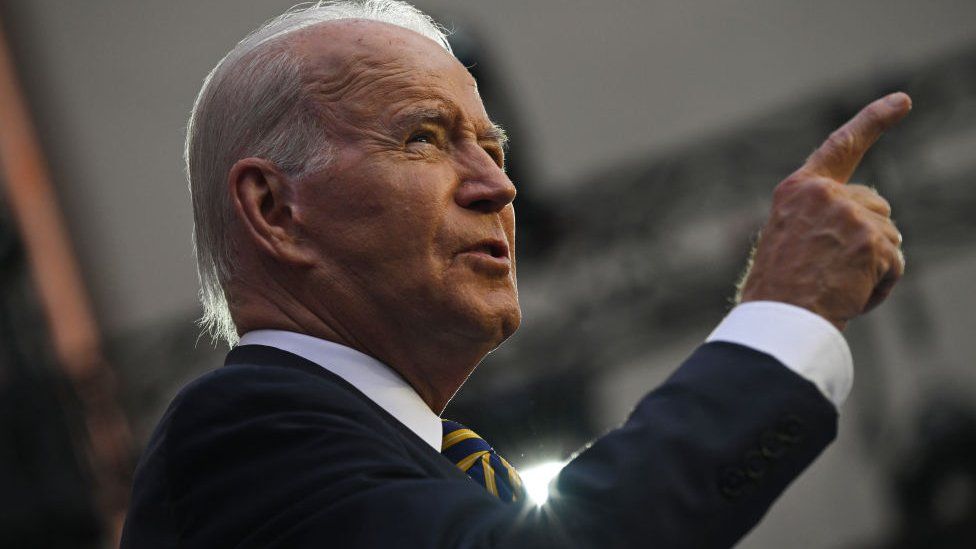

Representatives from Amazon, Anthropic, Google, Inflection, Meta, Microsoft, and OpenAI joined US President Joe Biden to make the announcement.

It follows a number of warnings about the capabilities of the technology.

The pace at which the companies have been developing their tools has prompted fears over the spread of disinformation, especially in the run-up to the 2024 US presidential election.

“We must be clear-eyed and vigilant about the threats emerging from emerging technologies that can pose – don’t have to but can pose – to our democracy and our values,” President Joe Biden said during remarks on Friday.

On Wednesday, Meta, Facebook’s parent company, announced its own AI tool called Llama 2.

Sir Nick Clegg, president of global affairs at Meta, told the BBC the “hype has somewhat run ahead of the technology”.

As part of the agreement signed on Friday, the companies agreed to:

- Security testing of their AI systems by internal and external experts before their release.

- Ensuring that people are able to spot AI by implementing watermarks.

- Publicly reporting AI capabilities and limitations on a regular basis.

- Researching risks such as bias, discrimination and the invasion of privacy.

The goal is for it to be easy for people to tell when online content is created by AI, the White House added.

“This is a serious responsibility, we have to get it right,” Mr Biden said. “And there’s enormous, enormous potential upside as well.”

Watermarks for AI-generated content were among topics EU commissioner Thierry Breton discussed with OpenAI chief executive Sam Altman during a June visit to San Francisco.

“Looking forward to pursuing our discussions – notably on watermarking,” Mr Breton wrote in a tweet that included a video snippet of him and Mr Altman.

In the video clip, Mr Altman said he “would love to show” what OpenAI was doing with watermarks “very soon”.

The voluntary safeguards signed on Friday are a step towards more robust regulation around AI in the US.

The administration is also working on an executive order, it said in a statement.

The White House said it would also work with allies to establish an international framework to govern the development and use of AI.

Warnings about the technology include that it could be used to generate misinformation and destabilise society, and even that it could pose an existential risk to humanity – although some ground-breaking computer scientists have said apocalyptic warnings are overblown.